Research

KV Cache Compression

- Institution: The Hong Kong Polytechnic University

- Category: Machine Learning

- Highlights: Compression

- Term: Ongoing

Description:

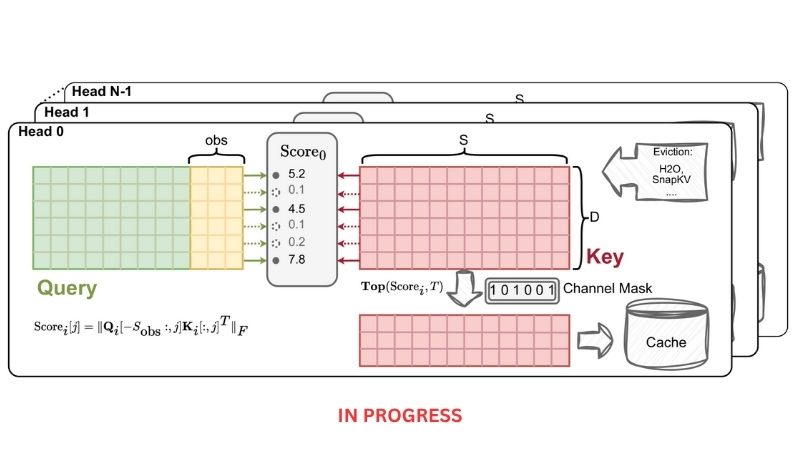

Attempting to integrate a new lossy compressor, written in CUDA, into the vLLM library so that cached key-value (KV) data can be compressed directly on the GPU, reducing the memory footprint of the KV cache.

Link to the vLLM library: vLLM - LLM inference & serving
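To show what "lossy compression of cached KV data" means in practice, here is a minimal sketch using block-wise absmax int8 quantization. This is a common, simple lossy scheme swapped in purely for illustration: it is not the project's CUDA compressor, it does not model vLLM's actual KV cache layout, and the helper names (`compress_block`, `compress_kv`, etc.) are hypothetical. Each block of values shares one scale, and every value is rounded to the nearest int8 step, so the reconstruction error per element is bounded by half a step.

```python
import math
from array import array
from typing import List, Tuple

# Hypothetical helpers for illustration only; these are not vLLM APIs.
Block = Tuple[float, array]  # (per-block scale, int8 codes)


def compress_block(values: List[float]) -> Block:
    """Lossy-compress one block to int8 codes sharing a single absmax scale."""
    absmax = max((abs(v) for v in values), default=0.0)
    # Guard against an all-zero block so we never divide by zero.
    scale = absmax / 127.0 if absmax > 0 else 1.0
    # Round each value to the nearest quantization step, clamped to the int8 range.
    codes = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return scale, codes


def decompress_block(block: Block) -> List[float]:
    """Reconstruct approximate values; per-element error is at most scale / 2."""
    scale, codes = block
    return [c * scale for c in codes]


def compress_kv(kv: List[float], block_size: int = 64) -> List[Block]:
    """Split a flattened K or V buffer into fixed-size blocks and compress each."""
    return [compress_block(kv[i:i + block_size]) for i in range(0, len(kv), block_size)]


def decompress_kv(blocks: List[Block]) -> List[float]:
    """Concatenate the reconstructed blocks back into one flat buffer."""
    out: List[float] = []
    for block in blocks:
        out.extend(decompress_block(block))
    return out


# Usage: 256 synthetic "KV" values -> 4 blocks of 64 int8 codes plus 4 scales.
kv = [math.sin(0.1 * i) for i in range(256)]
blocks = compress_kv(kv)
approx = decompress_kv(blocks)
max_err = max(abs(a - b) for a, b in zip(kv, approx))
print(f"blocks={len(blocks)} max_abs_error={max_err:.5f}")
```

For scale: storing these 256 values in FP16 takes 512 bytes, while the sketch stores 256 bytes of int8 codes plus 4 per-block scales (16 bytes if kept as float32), about 272 bytes in total. A GPU implementation would presumably process many blocks in parallel inside a kernel; the pure-Python version only illustrates the size/accuracy trade-off.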